In my current work at UniBW, through the DFG-funded project UbiHave, we research the use of on-body and environmental sensing technologies to detect and analyse human behaviour in different security-critical situations. We also research ways to detect and mitigate social engineering attacks targeting users on various platforms (e.g. email, social media).

We present a novel concept for detecting and influencing driver emotions using physiological sensing for classification and ambient light for feedback. We evaluated our concept with 12 drivers in a driving simulator with a fully equipped car.

We use three ambient lighting conditions (no light, blue, orange). Using a subject-dependent random forest classifier with 40 features collected from physiological data, we achieve an average accuracy of 78.9% for classifying valence and 68.7% for arousal. Driving performance was enhanced in conditions where ambient lighting was introduced. Both blue and orange light helped drivers to improve lane keeping.

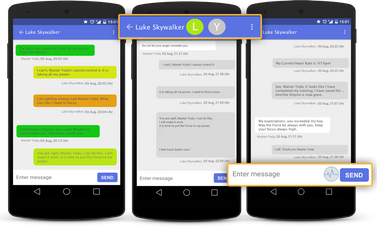

We present a mobile chat application, HeartChat, which integrates heart rate as a cue to increase awareness and empathy. Through a literature review and a focus group, we identified design dimensions important for heart rate augmented chats.

We created three concepts showing heart rate per message, in real-time, or sending it explicitly. We tested our system in a two-week in-the-wild study with 14 participants (7 pairs). Interviews and questionnaires showed that HeartChat supports empathy between people, in particular close friends and partners. Sharing heart rate helped them to implicitly understand each other's context (e.g. location, physical activity) and emotional state, and sparked curiosity on special occasions.

We explore how state-of-the-art methods of emotion elicitation can be adapted in virtual reality (VR). We envision that emotion research could be conducted in VR for various benefits, such as switching study conditions and settings on the fly, and conducting studies using stimuli that are not easily accessible in the real world, such as those needed to induce fear.

We conducted a user study (N=39) where we measured how different emotion elicitation methods (audio, video, image, autobiographical memory recall) perform in VR compared to the real world. We found that elicitation methods produce largely comparable results between the virtual and real world, but overall participants experience slightly stronger valence and arousal in VR. Emotions faded over time following the same pattern in both worlds. Our findings are beneficial to researchers and practitioners studying emotional user interfaces in VR.

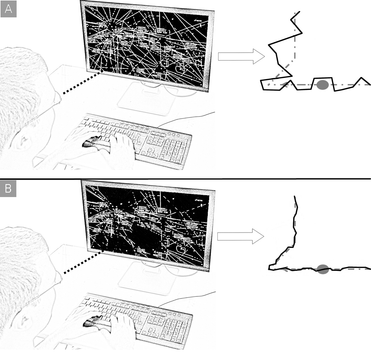

A common objective for context-aware computing systems is to predict how user interfaces impact user performance regarding their cognitive capabilities. Existing approaches such as questionnaires or pupil dilation measurements either only allow for subjective assessments or are susceptible to environmental influences and user physiology. We address these challenges by exploiting the fact that cognitive workload influences smooth pursuit eye movements.

We compared three trajectories and two speeds under different levels of cognitive workload within a user study (N=20). We found higher deviations of gaze points during smooth pursuit eye movements for specific trajectory types at higher cognitive workload levels. Using an SVM classifier, we predict cognitive workload through smooth pursuit with an accuracy of 99.5% for distinguishing between low and high workload as well as an accuracy of 88.1% for estimating workload between three levels of difficulty. We discuss implications and present use cases of how cognition-aware systems benefit from inferring cognitive workload in real-time by smooth pursuit eye movements.
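One way to make "deviation of gaze points" concrete is the mean Euclidean distance between each gaze sample and the target's position at the same timestamp. The sketch below is illustrative only: it assumes a circular trajectory and time-aligned samples, the function names, radius, and speed are hypothetical, and it is not the feature extraction used in the study.

```python
import math

def circular_target(t, radius=1.0, speed=1.0):
    """Position of a hypothetical target moving on a circle at time t (seconds)."""
    angle = speed * t
    return (radius * math.cos(angle), radius * math.sin(angle))

def mean_gaze_deviation(gaze_samples, target_fn):
    """Mean Euclidean distance between gaze points and the target position.

    gaze_samples: list of (t, x, y) tuples, assumed time-aligned with the target.
    target_fn: function mapping a timestamp t to the target's (x, y) position.
    """
    if not gaze_samples:
        raise ValueError("need at least one gaze sample")
    total = 0.0
    for t, x, y in gaze_samples:
        tx, ty = target_fn(t)
        total += math.hypot(x - tx, y - ty)
    return total / len(gaze_samples)
```

A value like this could serve as one input feature to a workload classifier, with larger deviations indicating less accurate pursuit of the target.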

The human body reveals emotional and bodily states through measurable signals, such as body language and electroencephalography. However, such manifestations are difficult to communicate to others remotely. We propose EmotionActuator, a proof-of-concept system to investigate the transmission of emotional states in which the recipient performs emotional gestures to understand and interpret the state of the sender. We call this kind of communication embodied emotional feedback, and present a prototype implementation.

To realize our concept, we chose four emotional states: amused, sad, angry, and neutral. We designed EmotionActuator through a series of studies to assess emotional classification via EEG, and to create an EMS gesture set by comparing composed gestures from the literature to sign-language gestures. In a final study with the end-to-end prototype, interviews revealed that participants like implicit sharing of emotions and find the embodied output to be immersive, but want to have control over which emotions are shared and with whom. This work contributes a proof-of-concept system and a set of design recommendations for designing embodied emotional feedback systems.

Obtaining information about audience engagement in presentations is a valuable asset for presenters in many domains. Prior literature mostly utilized explicit methods of collecting feedback, which induce distractions, add workload for the audience, and do not provide objective information to presenters.

We present EngageMeter, a system that allows fine-grained information on audience engagement to be obtained implicitly from multiple brain-computer interfaces (BCI) and to be fed back to presenters for real-time and post-hoc access. Through an evaluation during an HCI conference (audience: N=11, presenters: N=3), we found that EngageMeter provides value to presenters (a) in real-time, since it allows reacting to current engagement scores by changing tone or adding pauses, and (b) post-hoc, since presenters can adjust their slides and embed extra elements. We discuss how EngageMeter can be used in collocated and distributed audience sensing as well as how it can aid presenters in long-term use.

Photo: Angelika Wagner